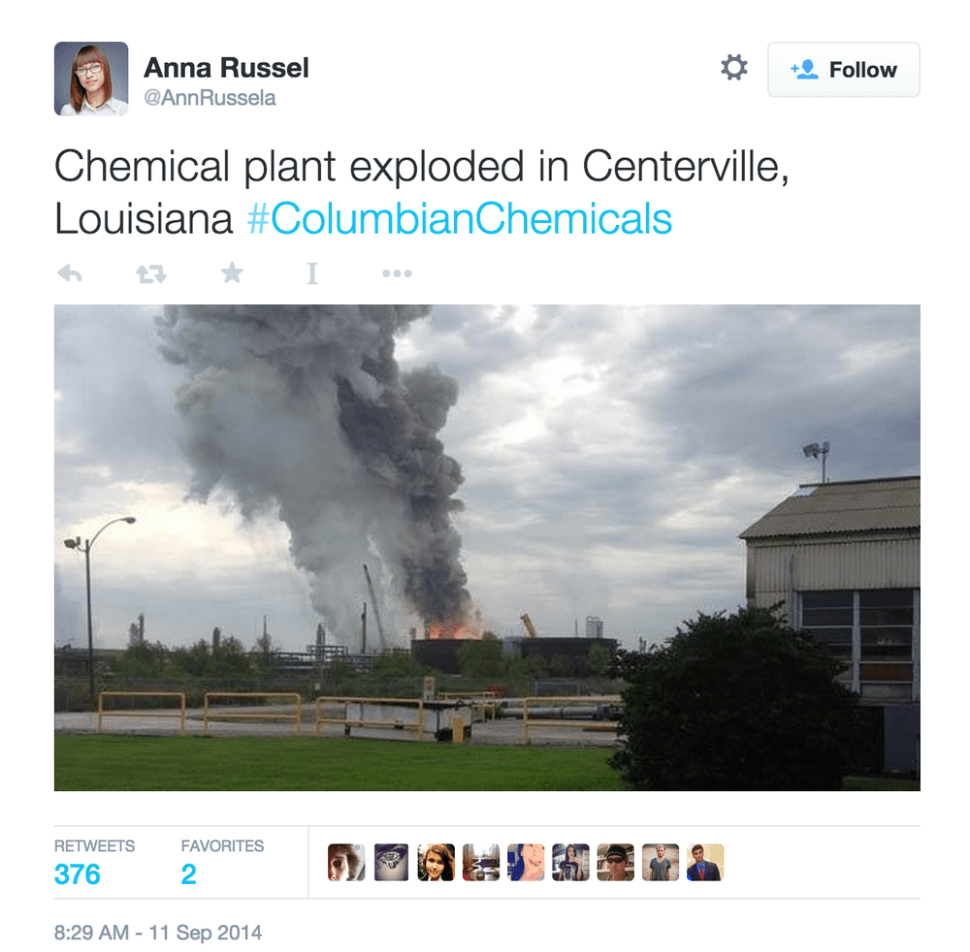

Gilad Lotan has spotted some pretty sophisticated fake-news generation, possibly from Russia, and possibly related to my weird, larval twitterbots, aimed at convincing you that ISIS had blown up a Louisiana chemical factory.

On September 11, 2014, Lotan, a data scientist, started researching a massive, coordinated, and failed hoax to create panic over an imaginary ISIS attack on a chemical plant in Centerville, Louisiana. The hoax included Twitter, Facebook and Wikipedia identities (some apparently human-piloted, others clearly automated) that had painstakingly established themselves over more than a month. Also included: fake news stories, an imaginary media outlet called “Louisiana News,” and some fascinating hashtag trickery whereby a generic hashtag was built up in Russian Twitter by one set of bots, then, once trending, was handed over to a different set of English-language bots that used it to promote the hoax.

More interesting is the fact that the hoax failed. Lotan shows that Facebook’s EdgeRank proved resistant to gaming by the process the hoax’s creator(s) employed; that Twitter clusters can be trumped by real news sources; and that Wikipedia’s vigilance was adequate to catch fakesters who create hoax pages.

Lotan has some important thoughts on the future of fake news, hoaxes and political manipulation. One important takeaway from Lotan’s analysis is that, despite the energy and technical sophistication of the attack, the hoaxer(s) made some dumb mistakes, like not giving their fake Wikipedian a richer, longer edit history; and not changing the sent-by string on their twitterbots (all the hoax tweets were sent by an app called “mass post” or “mass post2”).
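To make the sent-by giveaway concrete, here is a minimal sketch of how one might tally the client string across a batch of tweets. It assumes each tweet is a plain dict with a `source` key holding the client name; the function names, the threshold, and the sample data are all hypothetical illustrations, not Lotan's actual method or dataset.

```python
from collections import Counter


def source_counts(tweets):
    """Count tweets per client string.

    Assumes each tweet is a dict with a 'source' key (an assumption
    made for this sketch); missing values are counted as 'unknown'.
    """
    return Counter(t.get("source", "unknown") for t in tweets)


def dominant_sources(tweets, threshold=0.5):
    """Return client strings responsible for at least `threshold` of the tweets."""
    counts = source_counts(tweets)
    total = sum(counts.values())
    if total == 0:
        return []
    return sorted(s for s, c in counts.items() if c / total >= threshold)


# Made-up sample for illustration only, not real hoax data.
sample = [
    {"source": "mass post"},
    {"source": "mass post"},
    {"source": "mass post2"},
    {"source": "Twitter Web Client"},
]
suspicious = dominant_sources(sample, threshold=0.5)
```

A hashtag whose traffic comes overwhelmingly from one obscure client is worth a second look, though a high share alone proves nothing; plenty of legitimate scheduling tools would trip the same check.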

Finally, I’m fascinated to see that the bot-tweets were surfaced into real Twitter by long-standing, still-extant, apparently human-piloted accounts from Russian Twitter. Are @GelmutKol, @Kiborian and @Galtaca sleeper agents who carry on normal Twitter discourse for years at a time, but every now and again promote botnoise into real Twitter? Are they regular users whose compromised PCs (or stolen passwords) are used to push out messages every now and again? Or are they spectacularly subtle bots themselves, computationally intensive members of the botherd who pass among humans?

Did this stuff kick off in Russia? Or was it a false flag from non-Russians using Russian Twitter to point attention overseas? Or a Russian firm working for hire on behalf of foreigners? Why try to create gwot-panics on Sept 10? It’s head-spinningly futurismic.

There’s a very important lesson learned here, crystallized by the network graph to the left. No matter how much volume, how many tweets, or Facebook likes a campaign generates, if the messages aren’t embedded within existing networks of information flow, it will be very difficult for information to actually propagate. In the case of this hoax on Twitter, the malicious accounts are situated within a completely different network. So unless they attain follows from “real accounts,” they can scream as loud as they’d like, still no one will hear them. One way to bypass this is by getting your topic to trend on Twitter, increasing visibility significantly.

Social networked spaces make it increasingly difficult for a bot or malicious account to look like a real person’s account. While a profile may look convincingly real (having a valid profile picture, posting human readable texts, and sharing interesting content), it is hard for them to fake their location within the network; it is hard to get real users to follow them. We can clearly see this in the image above: the community of Russian bots are completely disconnected from any other user interacting with the hashtag.

The same principle holds for Wikipedia, which is even harder to game as it is easy to identify those accounts who are not really connected to the larger editing community. The more time you spend making relevant edits and the more trusted your account becomes the more authority you gain. One can’t simply expect to appear, make minor edits on three pages, and then put up a page detailing a terror act without seeming suspicious.

As our information landscapes evolve over time, we’ll see more examples of ways in which people abuse and game these systems for the purpose of giving visibility and attention to their chosen topic. Yet as more of our information propagation mechanisms are embedded within networks, it will become harder for malicious and automated accounts to operate in disguise. Whoever ran this hoax was extremely thorough, yet still unable to hack the network and embed the hoax within a pre-existing community of real users.
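The "disconnected community" point can be sketched in a few lines. The following is a minimal illustration, assuming interactions around a hashtag are available as undirected pairs of account names and that some set of accounts is already known to be genuine; the function names, the `trusted` set, and the sample edges are hypothetical and are not drawn from Lotan's analysis, which used its own tooling.

```python
from collections import defaultdict, deque


def components(edges):
    """Split an undirected interaction graph into connected components (BFS)."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)

    seen = set()
    result = []
    for start in list(graph):
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        comp = set()
        while queue:
            node = queue.popleft()
            comp.add(node)
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        result.append(comp)
    return result


def isolated_from(edges, trusted):
    """Return accounts in components that contain no trusted account."""
    isolated = set()
    for comp in components(edges):
        if not comp & trusted:
            isolated |= comp
    return isolated


# Made-up edges for illustration: one cluster of bots, one of real users.
edges = [
    ("bot1", "bot2"), ("bot2", "bot3"),
    ("news", "alice"), ("alice", "bob"),
]
cut_off = isolated_from(edges, trusted={"news"})
```

Real follow graphs are rarely this clean, so a practical version would more likely measure how few links cross between clusters rather than insist on none at all.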

Columbian Chemicals Hack [Gilad Lotan/In Beta]

(Thanks, Gilad!)