The theory of Big Data is that the numbers have an objective property that makes their revealed truth especially valuable. But as Kate Crawford points out, Big Data has inherent, lurking bias, because the datasets are the creation of fallible, biased humans. For example, the data points on how people reacted to Hurricane Sandy mostly emanate from Manhattan, because that's where the highest concentration of people wealthy enough to own tweeting, data-emanating smartphones is. But more severely affected locations (Breezy Point, Coney Island and Rockaway) produced almost no data, because they had fewer smartphones per capita, and the ones they had didn't work because their power and cellular networks failed first.

I wrote about this in 2012, when Google switched strategies for describing the way it arrived at its search rankings. Prior to that, the company had described its ranking process as a mathematical one and told people who didn't like how they were ranked that the problem was their own, because the numbers didn't lie. After governments took this argument to heart and started ordering Google to change its search results (on the grounds that there's no free speech question if you're just ordering post-processing on the outcome of an equation), Google started commissioning law review articles explaining that the algorithms that determined search rank were the outcome of an expressive, human, editorial process that deserved free speech protection.

While massive datasets may feel very abstract, they are intricately linked to physical place and human culture. And places, like people, have their own individual character and grain. For example, Boston has a problem with potholes, patching approximately 20,000 every year. To help allocate its resources efficiently, the City of Boston released the excellent StreetBump smartphone app, which draws on accelerometer and GPS data to help passively detect potholes, instantly reporting them to the city. While certainly a clever approach, StreetBump has a signal problem. People in lower income groups in the US are less likely to have smartphones, and this is particularly true of older residents, where smartphone penetration can be as low as 16%. For cities like Boston, this means that smartphone data sets are missing inputs from significant parts of the population — often those who have the fewest resources.

Fortunately Boston’s Office of New Urban Mechanics is aware of this problem, and works with a range of academics to take into account issues of equitable access and digital divides. But as we increasingly rely on big data’s numbers to speak for themselves, we risk misunderstanding the results and in turn misallocating important public resources. This could well have been the case had public health officials relied exclusively on Google Flu Trends, which mistakenly estimated that peak flu levels reached 11% of the US public this flu season, almost double the CDC’s estimate of about 6%. While Google will not comment on the reason for the overestimation, it seems likely that it was caused by the extensive media coverage of the flu season, creating a spike in search queries. Similarly, we can imagine the substantial problems if FEMA had relied solely upon tweets about Sandy to allocate disaster relief aid.

The Hidden Biases in Big Data [Kate Crawford/HBR]

(via O'Reilly Radar)

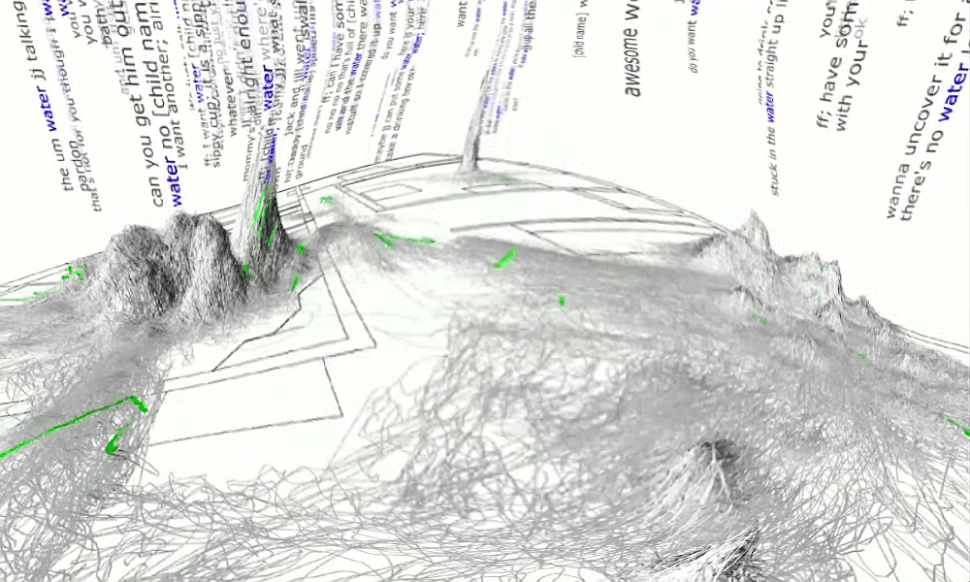

(Image: Big Data: water wordscape, Marius B, CC-BY)